Integrated process: Advancing point cloud processing and NURBS modeling

An exploration of challenges and solutions in bridging workflows, software integration, and open-source tools

Daria Dordina, Dipl.-Ing., M. Sc., GMV, TU Dresden

Daria Dordina studied Architecture at the National Research Moscow State University of Civil Engineering, and Media Architecture at the Bauhaus University Weimar. Daria has experience in architecture and exhibition design and has been involved in the research project FreeForm4BIM since 2021.

Zaqi Fathis, M. Arch, GMV, TU Dresden

Zaqi Fathis holds an M.Arch degree in EmTech from the AA School, UK. He is currently a Research Associate at GMV, where he is involved in the research project FreeForm4BIM. His research interest lies in the intersection between material computation, complex geometry, and digital fabrication.

Cyrill Milkau, M. Sc., Dresden University of Applied Sciences

Cyrill Milkau earned a degree in Geodesy and Geoinformation from the TU Dresden, specializing in laserscanning and photogrammetry. He is currently a software engineer and developer of point cloud processing techniques for the FreeForm4BIM research project.

Daniel Lordick, Prof. Dr.-Ing., Head of GMV, TU Dresden

Daniel Lordick studied Architecture at the TU Berlin and the Carleton University in Ottawa. He holds a doctoral degree from the Karlsruhe Institute of Technology in the field of line geometry and is Professor at the TU Dresden. He is involved in several research projects in the fields of AEC.

Keywords: point cloud processing, surface reconstruction, software integration, workflow automation, industry-academia collaboration

Abstract

The use of 3D scans has grown significantly in recent years. Extracting geometric information from 3D point clouds remains challenging, yet it is essential for building reconstruction and analysis, especially for Building Information Modelling (BIM).

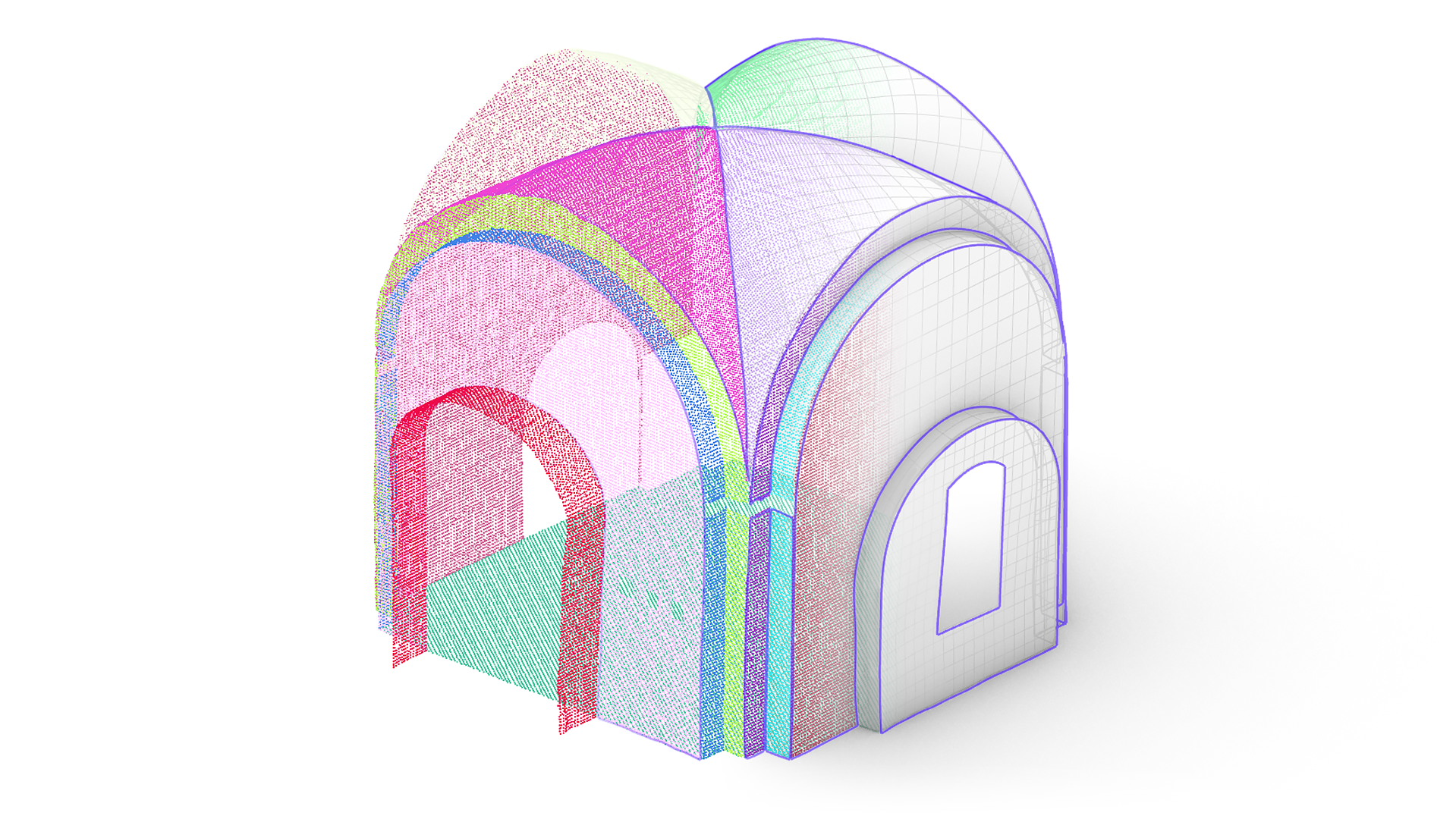

This paper reflects on the research project FreeForm4BIM, a part of the ZIM innovation network twin4BIM. FreeForm4BIM aims to enable the true-to-deformation creation of free-form Non-Uniform Rational B-Spline (NURBS) elements of historic buildings based on point cloud data. We provide an overview of the current state of the art in this research area and a survey of the proprietary tools and open-source software (OSS) developed to automate NURBS reverse engineering from 3D scan data. We reflect on technical challenges and emphasize goal-oriented work and interaction between developers, researchers, and users. By sharing our experiences and insights, we aim to contribute to the collective knowledge in this domain and foster collaborative innovation among researchers and practitioners in applied sciences.

Introduction

The project FreeForm4BIM comprises four key actors: two academic institutions, TU Dresden and HTW Dresden; the Berlin-based firm Scan3D GmbH, which specializes in 3D scanning and geometry reconstruction; and the firm Fokus GmbH Leipzig, which specializes in software development for construction surveying.

The established approach among project partners relied on commercial software for a semi-manual segmentation process. Point cloud segments were then imported into Rhinoceros, where NURBS geometry was generated from manually outlined boundary curves and selected point cloud segments. Our research challenge was to explore opportunities to minimize the manual aspect of this workflow. The subsequent development phase aimed to implement the solutions derived from our research into the workflow. Researchers have identified the extensive post-processing and cleaning phases as major obstacles in reverse engineering.1 Throughout this project, we have been addressing the automation of these phases.2

Right from the beginning, it was evident that we would need to adapt to different environments, as two project partners were accustomed to distinct software platforms. Over the course of the project, it became apparent that we needed to establish an ecosystem of various software tools to create a practical and coherent workflow. Developing such an approach is a multifaceted challenge. In this paper, its aspects are structured by a framework that evolved from the (overlapping) perspectives of users, researchers, and developers.

Methodology

User perspective

Users who aim to reconstruct geometries from 3D scan data have high expectations regarding visualization quality and a practical, efficient method of managing intermediate results. We address this by separating computationally intensive tasks from tasks that require direct monitoring and confirmation.

The typical starting point is raw point cloud data from acquisition methods such as terrestrial laser scanning (TLS). The following functionalities need to be available for processing: cloud conversion, merging, filtering, transformation, and segmentation, as surface creation requires meaningful segments to work with. The user needs convenient ways to transfer the results to other applications.
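As a rough illustration of three of the functionalities listed above (merging, filtering, and transformation), the following sketch uses only the Python standard library. It is not the project's implementation: the function names are hypothetical, voxel-grid averaging is just one common filtering choice, and the transformation is limited to a rotation about the Z axis followed by a translation.

```python
import math
from collections import defaultdict


def merge_clouds(*clouds):
    """Merge several point lists into one (no duplicate removal)."""
    merged = []
    for cloud in clouds:
        merged.extend(cloud)
    return merged


def voxel_downsample(points, voxel_size):
    """Filter: replace all points inside the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    result = []
    for members in buckets.values():
        n = len(members)
        result.append(tuple(sum(axis) / n for axis in zip(*members)))
    return result


def rigid_transform_z(points, angle_rad, translation):
    """Transformation: rotate about the Z axis, then translate."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz)
            for x, y, z in points]
```

In practice, libraries such as those discussed in the next section provide optimized versions of these steps; the sketch only makes the data flow explicit.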

Rather than pursuing a universal method, our approach to the creation of NURBS surfaces focuses on building a comprehensive toolkit of tailored solutions. In preparation for surface reconstruction, these tools process and structure partial point cloud datasets. This includes the calculation of geometric features, curve clustering and interpolation, the construction of a graph structure, the generation of meaningful parameterisation data, and the creation of NURBS surfaces.
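To make the notion of geometric features more concrete, the sketch below computes the eigenvalues of the covariance matrix of a point neighbourhood and derives linearity, planarity, and sphericity from them. This feature set is a widely used convention in point cloud analysis and is assumed here for illustration; the text does not state which features the project actually computes. All function names are hypothetical.

```python
import math


def covariance_3x3(points):
    """Covariance matrix of a list of (x, y, z) points."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j]
    return [[value / n for value in row] for row in cov]


def symmetric_eigenvalues(a):
    """Eigenvalues of a symmetric 3x3 matrix in descending order.

    Uses the closed-form trigonometric solution, which loses some
    precision when eigenvalues (nearly) coincide.
    """
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # matrix is already diagonal
        return sorted((a[0][0], a[1][1], a[2][2]), reverse=True)
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p2 = sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)]
         for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det_b / 2.0))  # guard against rounding
    phi = math.acos(r) / 3.0
    l1 = q + 2.0 * p * math.cos(phi)
    l3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    l2 = 3.0 * q - l1 - l3
    return [l1, l2, l3]


def eigen_features(points):
    """Linearity, planarity, and sphericity of a point neighbourhood."""
    raw = symmetric_eigenvalues(covariance_3x3(points))
    l1, l2, l3 = (max(0.0, v) for v in raw)  # clamp rounding noise
    if l1 == 0.0:
        raise ValueError("all points coincide")
    return {
        "eigenvalues": [l1, l2, l3],
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
    }
```

A flat wall patch yields a planarity close to 1, while points along an edge yield a linearity close to 1, which is what makes such values useful for structuring segments.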

Researcher perspective

As researchers, we mostly rely on available open-source software, libraries, and algorithm documentation. Some programs support either the development of plug-ins or the coding of interfaces by offering Software Development Kits (SDKs) or similar. This approach benefits both sides: the official developer can keep the core software commercial, while users can customize it directly within their capabilities.

The general point cloud processing steps named above are available in a variety of OSS. CloudCompare3 bundles a variety of point cloud processing techniques and supports most 3D data file formats. MeshLab4 and Instant Meshes,5 as alternatives, primarily concentrate on point-to-mesh processing.

The Point Cloud Library6 (PCL) provides many standalone algorithms to process point clouds in C++. Of particular interest to us is the integrated OpenNURBS7 (ON) toolkit, which is specifically designed for exchanging 3D geometries between different applications. Open3D8 is an alternative library for 3D data processing that is also available in Python. Like PCL, it relies on contributions from the open-source community. Other libraries such as the Point Data Abstraction Library9 or the semi-open-source LAStools10 primarily focus on higher-level command line interfaces rather than programmatic APIs.

Intermediate results from point cloud processing are transferred to the Rhinoceros+Grasshopper environment for surface creation. Without extensions, Grasshopper does not support working with point cloud instances. Therefore, we used either the Python script editor in Grasshopper or available plug-ins. Among the latter, we can highlight Cockroach,11 Volvox,12 and Tarsier.13 These plug-ins provide access to functions from open-source libraries for point cloud processing. Additionally, other categories of algorithms prove valuable for handling point clouds. These include algorithms for graph structures14 and for the calculation of space-partitioning data structures like RTrees and Kd-Trees (e.g. Lunchbox).15
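The value of a space-partitioning structure for point clouds can be illustrated with a minimal k-d tree that answers nearest-neighbour queries. The sketch below is a textbook construction in plain Python, not the implementation found in any of the plug-ins mentioned above; the function names and the dictionary-based node layout are assumptions made for brevity.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two 3D points."""
    return sum((a[i] - b[i]) ** 2 for i in range(3))


def build_kdtree(points, depth=0):
    """Recursively split the points at the median, cycling through x, y, z."""
    if not points:
        return None
    axis = depth % 3
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return {
        "point": ordered[mid],
        "axis": axis,
        "left": build_kdtree(ordered[:mid], depth + 1),
        "right": build_kdtree(ordered[mid + 1:], depth + 1),
    }


def nearest_neighbor(node, query, best=None):
    """Return (point, squared_distance) of the stored point closest to query."""
    if node is None:
        return best
    dist = squared_distance(node["point"], query)
    if best is None or dist < best[1]:
        best = (node["point"], dist)
    diff = query[node["axis"]] - node["point"][node["axis"]]
    near, far = ((node["left"], node["right"]) if diff < 0
                 else (node["right"], node["left"]))
    best = nearest_neighbor(near, query, best)
    # Visit the far side only if the splitting plane is closer than the best hit.
    if diff * diff < best[1]:
        best = nearest_neighbor(far, query, best)
    return best
```

Compared with a brute-force scan, the tree skips whole subtrees whose splitting plane lies farther away than the best candidate found so far, which is what makes neighbourhood queries on large scans tractable.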

Developer perspective

Our essential role as developers is to contribute to the infrastructure of every software package that is part of the project and to ensure that it functions.

Point cloud file formats can be classified into standardized (e.g. E57),16 de facto standardized (e.g. LAS/LAZ),17 custom (e.g. PCD),18 and manufacturer-based proprietary (e.g. LGS).19 In addition to the ability to deal with different file formats, the internal handling of data varies among programs. For instance, PCL and Open3D may handle data from the same PCD file differently, for example with regard to data storage order, the procedures invoked, and internal exchange methods. When integrating OSS into proprietary software, it is crucial to manage these distinctions properly.
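To show why storage order matters, the following sketch reads a minimal ASCII PCD file and exposes the header fields that determine how the flat list of points is to be interpreted. It is a simplified reader written for illustration only: it assumes `DATA ascii` and `COUNT 1` for every field, and the function names are hypothetical. It does not reproduce how PCL or Open3D load the format.

```python
SAMPLE_PCD = """\
# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z
SIZE 4 4 4
TYPE F F F
COUNT 1 1 1
WIDTH 2
HEIGHT 2
VIEWPOINT 0 0 0 1 0 0 0
POINTS 4
DATA ascii
0.0 0.0 0.0
1.0 0.0 0.0
0.0 1.0 0.0
1.0 1.0 0.5
"""


def parse_ascii_pcd(text):
    """Parse an ASCII PCD string into header information and point records."""
    lines = text.splitlines()
    header, data_start = {}, None
    for index, line in enumerate(lines):
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(" ")
        header[key] = value.split()
        if key == "DATA":
            data_start = index + 1
            break
    if header.get("DATA") != ["ascii"]:
        raise ValueError("this sketch only handles 'DATA ascii'")
    fields = header["FIELDS"]
    width, height = int(header["WIDTH"][0]), int(header["HEIGHT"][0])
    points = [dict(zip(fields, map(float, row.split())))
              for row in lines[data_start:] if row.strip()]
    if len(points) != width * height:
        raise ValueError("point count does not match WIDTH * HEIGHT")
    return {"fields": fields, "width": width, "height": height,
            "organized": height > 1, "points": points}


def point_at(cloud, row, col):
    """Look up a point in an organized cloud, assuming row-major order."""
    return cloud["points"][row * cloud["width"] + col]
```

With `HEIGHT` greater than 1, the file describes an organized cloud whose points form an image-like grid; a consumer that ignores this and treats the data as an unordered list loses the row and column relationship.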

We use our partner’s proprietary software metigo MAP20 (specifically its development module metigo IPF) to develop and test our algorithms in an encapsulated environment. It provides several integrated tools, such as geometry visualization and efficient file structuring, so that we can focus on project-specific tasks. Results are primarily transferred via an integrated interface (RhinoCommon plug-in) to Rhinoceros for further processing.

Bundling our algorithms in external libraries, whether static or dynamic, simplifies codebase management and separates code under commercial licenses from code under open-source licenses. This approach covers all relevant aspects of licensing, including ownership, distribution, and warranty.

The JavaScript Object Notation (JSON) offers flexibility in exchanging both microscopic information like pointwise eigenvalues and macroscopic features such as the segment neighborhood. It is used as a second way to transfer information between the development module IPF and Rhinoceros.
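A JSON exchange of this kind could look roughly like the sketch below, which stores pointwise eigenvalues alongside the neighbourhood of each segment. The key names (`id`, `points`, `eigenvalues`, `neighbors`) and the `schema` tag are hypothetical and chosen only for illustration; the actual structure used between IPF and Rhinoceros is not described in this paper.

```python
import json

# Hypothetical example data: two neighbouring segments with one point each.
EXAMPLE_SEGMENTS = [
    {
        "id": 1,
        "neighbors": [2],  # macroscopic feature: adjacent segments
        "points": [
            {"xyz": [0.0, 0.0, 0.0],
             "eigenvalues": [0.8, 0.15, 0.05]},  # microscopic feature
        ],
    },
    {
        "id": 2,
        "neighbors": [1],
        "points": [
            {"xyz": [1.0, 0.0, 0.2],
             "eigenvalues": [0.6, 0.3, 0.1]},
        ],
    },
]


def export_segments(segments):
    """Serialize segments to a JSON string that another tool can read."""
    return json.dumps({"schema": "segment-exchange-sketch",
                       "segments": segments}, indent=2)


def import_segments(text):
    """Parse the JSON string back into a dictionary keyed by segment id."""
    document = json.loads(text)
    return {segment["id"]: segment for segment in document["segments"]}


def neighborhood_is_symmetric(segments_by_id):
    """Check that every neighbour relation is recorded in both directions."""
    for seg_id, segment in segments_by_id.items():
        for other in segment["neighbors"]:
            if seg_id not in segments_by_id[other]["neighbors"]:
                return False
    return True
```

Because the format is plain text, the same file can be inspected by hand, compared between runs, and read from both C++ and Python without a shared binary interface.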

For the surface creation part, the goal is to create a set of Python-based blocks compiled as a Grasshopper plug-in to benefit from the advantage of code centralization. This centralization ensures that the code remains in a single source, simplifying maintenance and facilitating code sharing within the Grasshopper ecosystem.

Integrating Python libraries directly within Grasshopper presents technical challenges, primarily because Grasshopper uses IronPython as its scripting language, while many Python libraries, such as Open3D, NumPy, and SciPy, are designed for CPython. As a workaround, we tried to access the libraries via GH Python Remote21 and Hops.22 While these approaches can bring CPython functionality into Grasshopper, they add complexity for users during testing and implementation, and they require careful testing to resolve compatibility issues between the Grasshopper and Python ecosystems. Nevertheless, this problem is resolved in the recent Rhinoceros 8 release (still in beta).

Software testing is vital, and it is supported by readily available guidelines23 and standards.24 We develop language-based unit tests to maintain functionality during development. Performance measures such as runtime evaluation are implemented directly in the source code. Grasshopper does not support unit tests by default; however, plug-ins25 exist to address that issue. Setting up a continuous integration pipeline for the automatic testing of Grasshopper plug-ins is also not straightforward. While most open-source automation servers do provide the necessary infrastructure, the complexity of creation and maintenance may overwhelm the average Rhinoceros user or developer. In our case, integration testing of developed modules is based on the ability to share results via JSON files.
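The combination of unit tests and in-source runtime evaluation can be sketched as follows, using only the Python standard library. The `timed` decorator, the `centroid` function, and the test case are hypothetical stand-ins for project code, which is not shown in this paper.

```python
import functools
import time
import unittest


def timed(func):
    """Record the wall-clock runtime of the most recent call on the wrapper."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        wrapper.last_runtime = time.perf_counter() - start
        return result
    wrapper.last_runtime = None
    return wrapper


@timed
def centroid(points):
    """Mean position of a non-empty list of (x, y, z) points."""
    if not points:
        raise ValueError("centroid of an empty point list is undefined")
    n = len(points)
    return tuple(sum(axis) / n for axis in zip(*points))


class CentroidTest(unittest.TestCase):
    def test_two_points(self):
        self.assertEqual(centroid([(0, 0, 0), (2, 2, 2)]), (1.0, 1.0, 1.0))

    def test_empty_input_raises(self):
        with self.assertRaises(ValueError):
            centroid([])

    def test_runtime_is_recorded(self):
        centroid([(1, 2, 3)])
        self.assertGreaterEqual(centroid.last_runtime, 0.0)


def run_tests():
    """Run the test case and report whether all tests passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CentroidTest)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```

Calling `run_tests()` returns a boolean, so the same check can be triggered from a script editor or from an automation server without relying on a command-line test runner.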

Collaborative coding requires the use of version control systems, like Git, and web services, such as GitHub. These tools are indispensable for efficient team collaboration on software development projects in text-based programming languages like C++ or Python. Some services, like GitLab, offer the option of private hosting on local servers, which addresses data security concerns. However, the binary file format of Grasshopper is poorly suited to version control because changes cannot be tracked and the files are relatively large. While the latter issue can be mitigated with auxiliary tools like Git Large File Storage, the former is the main reason we do not use Git for the visual scripting part.

Reflection

In summary, our current situation can be outlined as follows:

We are actively building a medium-sized commercial software solution, metigo MAP, leveraging open libraries. While the core development remains proprietary, we share experimental results from our work in conference reports. Simultaneously, we are developing components for a large commercial software environment, Rhinoceros+Grasshopper, using open libraries. We plan to package these components as a Grasshopper plug-in after the project is complete and to share this work publicly. Moreover, we are exploring ways to connect these two software environments using JSON and the ON library.

Depending on the situation, commercial users may prefer comprehensive software suites over single applications, due to their ability to provide uniformity, interoperability, and consistency in terms of graphical user interfaces, terminology, and documentation. However, this benefit is frequently accompanied by developer dependency, functionality overhead, and financial expense. An advantage of medium-sized companies is a well-balanced feedback loop from customer needs into the development process of the delivered software. The metigo IPF module was intentionally designed to expand this loop to the research community, leading to multiple collaborations with academic partners in the past.

Regarding the dissemination of our research results, we face the challenge of finding a balance between the commercial interests of our industry partners and the imperative of openness, especially when presenting a novel workflow that can reduce processing costs in a rapidly emerging industry. Our proposed solution to this issue is not to publish the workflow itself but rather to share a collection of basic tools developed during the research, thereby preserving the commercial confidentiality of the workflow while contributing valuable resources to the broader community.

Conclusion

In this article, we have outlined the essential perspectives involved in the FreeForm4BIM project, a collaborative effort among academic institutions and industry experts. Given the diversity of software environments used by project partners, we highlighted the importance of creating an ecosystem of software tools that contributes to a practical and coherent workflow.

In the paper, we address the three main challenges of our project: the initial challenge of automating manual workflows, the problem of seamless integration of the software ecosystem, and the issue of balancing proprietary tools and OSS. Ultimately, FreeForm4BIM serves as an example of the potential of collaboration between academia and industry by leveraging open-source tools and developing new solutions to advance the field of point cloud processing and NURBS modeling. It promotes a feedback loop between developers, users, and researchers to drive progress in this ever-evolving domain.

Acknowledgements

This research was funded by the Federal Ministry for Economic Affairs and Climate Action based on a resolution of the German Bundestag. We thank our industrial partners Scan3D GmbH and Fokus GmbH Leipzig for providing us with access to point cloud data, and their engineers for preparing data and generating the ground truth segmentations and NURBS surfaces used as a reference in our experiments.

References

1. Fabrizio Banfi, HBIM GENERATION: EXTENDING GEOMETRIC PRIMITIVES AND BIM MODELLING TOOLS FOR HERITAGE STRUCTURES AND COMPLEX VAULTED SYSTEMS (ISPRS – International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2019), XLII-2/W15 (August): 139-148.

2. Daria Dordina, Cyrill Milkau, Zlata Tošić, Daniel Lordick, and Danilo Schneider, Point Cloud to True-to-Deformation free-form NURBS (Advances in Architectural Geometry 2023), 125-137.

3. CloudCompare (version 2.12) [GPL software]. (2022). Retrieved from http://www.cloudcompare.org/

4. P. Cignoni, M. Callieri, M. Corsini, M. Dellepiane, F. Ganovelli, and G. Ranzuglia, MeshLab: an Open-Source Mesh Processing Tool (Sixth Eurographics Italian Chapter Conference, 2008), 129-136.

5. Wenzel Jakob, Marco Tarini, Daniele Panozzo, and Olga Sorkine-Hornung, Instant Field-Aligned Meshes, ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2015).

6. Radu B. Rusu and Steve Cousins, 3D is here: Point Cloud Library (PCL) (Proceedings of the IEEE International Conference on Robotics and Automation, 2011), 1-4.

7. https://www.rhino3d.com/de/features/developer/opennurbs/

8. Qian-Yi Zhou, Jaesik Park, and Vladlen Koltun, Open3D: A Modern Library for 3D Data Processing, 2018.

9. PDAL Contributors, PDAL Point Data Abstraction Library, 2022. https://doi.org/10.5281/zenodo.2616780

10. https://rapidlasso.de/

11. Petras Vestartas and Andrea Settimi, Cockroach: A Plug-in for Point Cloud Post-Processing and Meshing in Rhino Environment (EPFL ENAC ICC IBOIS, 2020), https://github.com/9and3/Cockroach

12. https://www.food4rhino.com/en/app/volvox

13. https://www.food4rhino.com/en/app/tarsier

14. https://www.food4rhino.com/en/app/leafvein

15. https://www.food4rhino.com/en/app/lunchbox

16. ASTM E2807-11 Standard Specification for 3D Imaging Data Exchange, Version 1.0

17. ASPRS LAS 1.4 Format Specification R15 July 9 2019

18. https://pcl.readthedocs.io/projects/tutorials/en/master/pcd_file_format.html#pcd-file-format

19. https://rcdocs.leica-geosystems.com/cyclone-register-360/latest/lgs-files

20. https://www.fokus-gmbh-leipzig.de/metigo_map-50_1.php?lang=de

21. https://github.com/pilcru/ghpythonremote

22. https://developer.rhino3d.com/guides/compute/hops-component/

23. https://insights.sei.cmu.edu/blog/a-taxonomy-of-testing/

24. IEEE Standard for Software and System Test Documentation (IEEE Std 829-2008), 1-150.

25. https://www.food4rhino.com/en/app/brontosaurus